There are many barriers to understanding AI models, some of them substantial, and they can stand in the way of implementing AI processes. But the first one many people encounter is understanding what we mean when we talk about tokens.

One of the most important practical parameters in choosing an AI language model is the size of its context window — the maximum input text size — which is given in tokens, not words or characters or any other automatically recognizable unit.

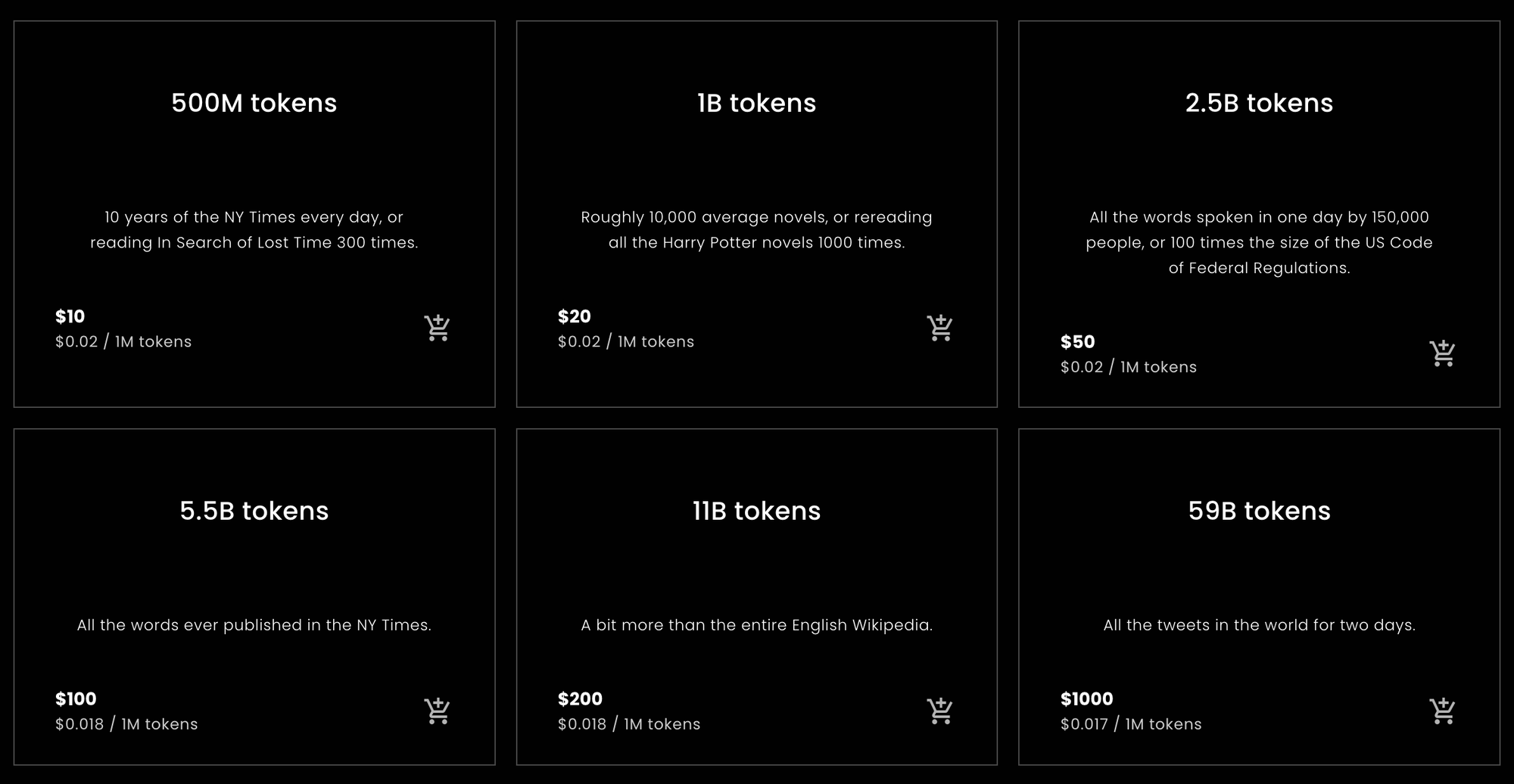

Furthermore, embedding services are typically priced “per token,” meaning tokens are important to understanding your bill.

This can be very confusing if you aren’t clear about what a token is.

But of all the confusing aspects of modern AI, tokens are probably the least complicated. This article will try to clarify what tokenization is, what it does, and why we do it that way.

tl;dr

If you just need a quick answer about how many tokens to buy from Jina Embeddings, or an estimate of how many you should expect to need, the following statistics are what you're looking for.

Tokens per English Word

During empirical testing, described further down in this article, a variety of English texts converted into tokens at a rate of about 10% more tokens than words, using Jina Embeddings English-only models. This result was pretty robust.

Jina Embeddings v2 models have a context window of 8,192 tokens. This means that if you pass a Jina model an English text longer than about 7,400 words, there is a good chance it will be truncated.

Tokens per Chinese Character

For Chinese, results are more variable. Depending on the text type, ratios varied from 0.6 to 0.75 tokens per Chinese character (汉字). English texts given to Jina Embeddings v2 for Chinese produce approximately the same number of tokens as Jina Embeddings v2 for English: roughly 10% more than the number of words.

Tokens per German Word

German word-to-token ratios are more variable than English but less than Chinese. Depending on the genre of the text, I got 20% to 30% more tokens than words on average. Giving English texts to Jina Embeddings v2 for German and English uses a few more tokens than the English-only and Chinese/English models: 12% to 15% more tokens than words.

Caution!

These are simple calculations, but they should be approximately right for most natural language texts and most users. Ultimately, we can only promise that the number of tokens will always be no more than the number of characters in your text, plus two. It will practically always be much less than that, but we cannot promise any specific count in advance.

These are estimates based on statistically naive calculations. We do not guarantee how many tokens any particular request will take.
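As a rough illustration of how these ratios can be turned into a budget, here is a minimal sketch. The function names and the rounded percentages (110, 125, 70) are choices made for this example, taken from the averages above, and the two extra tokens per request are the special tokens explained later in this article. Treat the output as an estimate, not a quote.

```python
# Average ratios from this article, as integer percentages to avoid
# floating-point rounding surprises. These are estimates, not guarantees.
TOKENS_PER_100_UNITS = {
    "en": 110,  # tokens per 100 English words
    "de": 125,  # tokens per 100 German words (observed range: 120 to 130)
    "zh": 70,   # tokens per 100 Chinese characters (observed range: 60 to 75)
}
SPECIAL_TOKENS_PER_REQUEST = 2  # added to every request
CONTEXT_WINDOW = 8192           # Jina Embeddings v2, in tokens


def estimate_tokens(units, lang, requests=1):
    """Estimate the tokens needed for `units` words (or Chinese characters)."""
    text_tokens = -(-units * TOKENS_PER_100_UNITS[lang] // 100)  # ceiling division
    return text_tokens + SPECIAL_TOKENS_PER_REQUEST * requests


def max_units_per_request(lang):
    """Estimate how many words (or characters) fit in one context window."""
    budget = CONTEXT_WINDOW - SPECIAL_TOKENS_PER_REQUEST
    return budget * 100 // TOKENS_PER_100_UNITS[lang]


print(estimate_tokens(1000, "en"))   # estimate for a 1,000-word English text
print(max_units_per_request("en"))   # estimated English words per request
```

For texts that sit close to the context window limit, it is safer to run the real tokenizer than to rely on an average.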

If all you need is advice on how many tokens to buy for Jina Embeddings, you can stop here. Other embedding models, from companies other than Jina AI, may not have the same token-to-word and token-to-Chinese-character ratios Jina models have, but they will not generally be very different overall.

If you want to understand why, the rest of this article is a deeper dive into tokenization for language models.

Words, Tokens, Numbers

Tokenization has been a part of natural language processing for longer than modern AI models have existed.

It’s a bit cliché to say that everything in a computer is just a number, but it’s also mostly true. Language, however, is not naturally just a bunch of numbers. It might be speech, made of sound waves, or writing, made of marks on paper, or even an image of a printed text or a video of someone using sign language. But most of the time, when we talk about using computers to process natural language, we mean texts composed of sequences of characters: letters (a, b, c, etc.), numerals (0, 1, 2…), punctuation, and spaces, in different languages and textual encodings.

Computer engineers call these “strings”.

AI language models take sequences of numbers as input. So, you might write the sentence:

What is today's weather in Berlin?

But, after tokenization, the AI model gets as input:

[101, 2054, 2003, 2651, 1005, 1055, 4633, 1999, 4068, 1029, 102]

Tokenization is the process of converting an input string into a specific sequence of numbers that your AI model can understand.

When you use an AI model via a web API that charges users per token, each request is converted into a sequence of numbers like the one above. The number of tokens in the request is the length of that sequence of numbers. So, asking Jina Embeddings v2 for English to give you an embedding for “What is today's weather in Berlin?” will cost you 11 tokens because it converted that sentence into a sequence of 11 numbers before passing it to the AI model.

AI models based on the Transformer architecture have a fixed-size context window whose size is measured in tokens. Sometimes this is called an “input window,” “context size,” or “sequence length” (especially on the Hugging Face MTEB leaderboard). It means the maximum text size that the model can see at one time.

So, if you want to use an embedding model, this is the maximum input size allowed.

Jina Embeddings v2 models all have a context window of 8,192 tokens. Other models will have different (typically smaller) context windows. This means that however much text you put into it, the tokenizer associated with that Jina Embeddings model must convert it into no more than 8,192 tokens.
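To make the relationship between token count, cost, and context window concrete, here is a small sketch using the token IDs shown above. The helper names are invented for this example, and the truncation rule (keep the start of the text plus the final ID) is only an assumption for illustration. Real tokenizers apply their own truncation logic.

```python
CONTEXT_WINDOW = 8192  # Jina Embeddings v2 context window, in tokens


def token_cost(token_ids):
    """The token count of a request is the length of its ID sequence."""
    return len(token_ids)


def truncate_to_window(token_ids, limit=CONTEXT_WINDOW):
    """Illustrative truncation: keep the first limit-1 IDs plus the final ID.

    Assumption: the last ID is an end-of-sequence marker worth preserving.
    Real tokenizers implement their own truncation rules.
    """
    if len(token_ids) <= limit:
        return list(token_ids)
    return list(token_ids[: limit - 1]) + [token_ids[-1]]


ids = [101, 2054, 2003, 2651, 1005, 1055, 4633, 1999, 4068, 1029, 102]
print(token_cost(ids))                    # length of the sequence
print(truncate_to_window(ids, limit=5))   # pretend the window is only 5 tokens
```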

Mapping Language to Numbers

The simplest way to explain the logic of tokens is this:

For natural language models, the part of a string that a token stands for is a word, a part of a word, or a piece of punctuation. Spaces are not generally given any explicit representation in tokenizer output.

Tokenization is part of a group of techniques in natural language processing called text segmentation, and the module that performs tokenization is called, very logically, a tokenizer.

To show how tokenization works, we’re going to tokenize some sentences using the smallest Jina Embeddings v2 for English model: jina-embeddings-v2-small-en. Jina Embeddings’ other English-only model — jina-embeddings-v2-base-en — uses the same tokenizer, so there’s no point in downloading extra megabytes of AI model that we won’t use in this article.

First, install the transformers module in your Python environment or notebook. Use the -U flag to make sure you upgrade to the latest version because this model will not work with some older versions:

pip install -U transformers

Then, download jina-embeddings-v2-small-en using AutoModel.from_pretrained:

from transformers import AutoModel

model = AutoModel.from_pretrained('jinaai/jina-embeddings-v2-small-en', trust_remote_code=True)

To tokenize a string, use the encode method of the tokenizer member object of the model:

model.tokenizer.encode("What is today's weather in Berlin?")

The result is a list of numbers:

[101, 2054, 2003, 2651, 1005, 1055, 4633, 1999, 4068, 1029, 102]

To convert these numbers back to string forms, use the convert_ids_to_tokens method of the tokenizer object:

model.tokenizer.convert_ids_to_tokens([101, 2054, 2003, 2651, 1005, 1055, 4633, 1999, 4068, 1029, 102])

The result is a list of strings:

['[CLS]', 'what', 'is', 'today', "'", 's', 'weather', 'in',

'berlin', '?', '[SEP]']

Note that the model’s tokenizer has:

- Added [CLS] at the beginning and [SEP] at the end. This is necessary for technical reasons and means that every request for an embedding will cost two extra tokens, above however many tokens the text takes.

- Split punctuation from words, turning “Berlin?” into berlin and ?, and “today’s” into today, ', and s.

- Put everything in lowercase. Not all models do this, but this can help with training when using English. It may be less helpful in languages where capitalization has a different meaning.

Different word-counting algorithms in different programs might count the words in this sentence differently. OpenOffice counts this as six words. The Unicode text segmentation algorithm (Unicode Standard Annex #29) counts seven words. Other software may come to other numbers, depending on how they handle punctuation and clitics like “’s.”

The tokenizer for this model produces nine tokens for those six or seven words, plus the two extra tokens needed with every request.
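The six-versus-seven discrepancy is easy to reproduce. The sketch below uses two regular expressions as rough stand-ins for the two counting conventions: one ignores punctuation, the other counts each punctuation mark as its own unit. These patterns are assumptions made for illustration, not the actual algorithms used by OpenOffice or by Unicode Standard Annex #29.

```python
import re

sentence = "What is today's weather in Berlin?"

# Convention 1: count words only, keeping word-internal apostrophes together.
words_only = re.findall(r"\w+(?:['’]\w+)*", sentence)

# Convention 2: same words, plus every punctuation mark as a separate unit.
words_and_punct = re.findall(r"\w+(?:['’]\w+)*|[^\w\s]", sentence)

print(len(words_only), words_only)
print(len(words_and_punct), words_and_punct)
```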

Now, let’s try with a less common place-name than Berlin:

token_ids = model.tokenizer.encode("I live in Kinshasa.")

tokens = model.tokenizer.convert_ids_to_tokens(token_ids)

print(tokens)

The result:

['[CLS]', 'i', 'live', 'in', 'kin', '##sha', '##sa', '.', '[SEP]']

The name “Kinshasa” is broken up into three tokens: kin, ##sha, and ##sa. The ## indicates that this token is not the beginning of a word.

If we give the tokenizer something completely alien, the number of tokens over the number of words increases even more:

token_ids = model.tokenizer.encode("Klaatu barada nikto")

tokens = model.tokenizer.convert_ids_to_tokens(token_ids)

print(tokens)

['[CLS]', 'k', '##la', '##at', '##u', 'bar', '##ada', 'nik', '##to', '[SEP]']

Three words become eight tokens, plus the [CLS] and [SEP] tokens.
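The splits above are consistent with the greedy longest-match-first procedure commonly used by BERT-style Wordpiece tokenizers: take the longest piece from the vocabulary that matches the start of the word, then repeat on the remainder, marking non-initial pieces with ##. The sketch below illustrates that procedure with a tiny hypothetical vocabulary built from the pieces shown in this article. The real vocabulary has tens of thousands of entries, and this is not the code the Jina tokenizer actually runs.

```python
def wordpiece(word, vocab, unk="[UNK]"):
    """Split one lowercase word into pieces by greedy longest-match-first."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # marks a piece that continues a word
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return [unk]  # no piece fits: the whole word becomes "unknown"
        pieces.append(match)
        start = end
    return pieces


# Hypothetical toy vocabulary, just large enough for the examples above.
TOY_VOCAB = {
    "i", "live", "in", "kin", "##sha", "##sa",
    "k", "##la", "##at", "##u", "bar", "##ada", "nik", "##to",
}

for word in ["kinshasa", "klaatu", "barada", "nikto"]:
    print(word, wordpiece(word, TOY_VOCAB))
```

Common words like “live” survive as a single piece because the whole word is in the vocabulary, while rare names fall back to progressively shorter pieces.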

Tokenization in German is similar. With the Jina Embeddings v2 for German model, we can tokenize a translation of "What is today's weather in Berlin?" the same way as with the English model.

german_model = AutoModel.from_pretrained('jinaai/jina-embeddings-v2-base-de', trust_remote_code=True)

token_ids = german_model.tokenizer.encode("Wie wird das Wetter heute in Berlin?")

tokens = german_model.tokenizer.convert_ids_to_tokens(token_ids)

print(tokens)

The result:

['<s>', 'Wie', 'wird', 'das', 'Wetter', 'heute', 'in', 'Berlin', '?', '</s>']

This tokenizer is a little bit different from the English one in that <s> and </s> replace [CLS] and [SEP] but serve the same function. Also, the text is not case-normalized — upper and lower cases remain as written — because capitalization is meaningful in German differently from English.

(To simplify this presentation, I removed a special character indicating a word's beginning.)

Now, let’s try a more complex sentence from a newspaper text:

Ein Großteil der milliardenschweren Bauern-Subventionen bleibt liegen – zu genervt sind die Landwirte von bürokratischen Gängelungen und Regelwahn.

sentence = """

Ein Großteil der milliardenschweren Bauern-Subventionen

bleibt liegen – zu genervt sind die Landwirte von

bürokratischen Gängelungen und Regelwahn.

"""

token_ids = german_model.tokenizer.encode(sentence)

tokens = german_model.tokenizer.convert_ids_to_tokens(token_ids)

print(tokens)

The tokenized result:

['<s>', 'Ein', 'Großteil', 'der', 'mill', 'iarden', 'schwer',

'en', 'Bauern', '-', 'Sub', 'ventionen', 'bleibt', 'liegen',

'–', 'zu', 'gen', 'ervt', 'sind', 'die', 'Landwirte', 'von',

'büro', 'krat', 'ischen', 'Gän', 'gel', 'ungen', 'und', 'Regel',

'wahn', '.', '</s>']

Here, you see that many German words were broken up into smaller pieces and not necessarily along the lines licensed by German grammar. The result is that a long German word that would count as just one word to a word counter might be any number of tokens to Jina’s AI model.

Let’s do the same in Chinese, translating “What is today's weather in Berlin?” as:

柏林今天的天气怎么样?

chinese_model = AutoModel.from_pretrained('jinaai/jina-embeddings-v2-base-zh', trust_remote_code=True)

token_ids = chinese_model.tokenizer.encode("柏林今天的天气怎么样?")

tokens = chinese_model.tokenizer.convert_ids_to_tokens(token_ids)

print(tokens)

The tokenized result:

['<s>', '柏林', '今天的', '天气', '怎么样', '?', '</s>']

In Chinese, there are usually no word breaks in written text, but the Jina Embeddings tokenizer frequently joins multiple Chinese characters together:

| Token string | Pinyin | Meaning |
|---|---|---|
| 柏林 | Bólín | Berlin |
| 今天的 | jīntiān de | today’s |
| 天气 | tiānqì | weather |
| 怎么样 | zěnmeyàng | how |

Let’s use a more complex sentence from a Hong Kong-based newspaper:

sentence = """

新規定執行首日,記者在下班高峰前的下午5時來到廣州地鐵3號線,

從繁忙的珠江新城站啟程,向機場北方向出發。

"""

token_ids = chinese_model.tokenizer.encode(sentence)

tokens = chinese_model.tokenizer.convert_ids_to_tokens(token_ids)

print(tokens)

(Translation: “On the first day that the new regulations were in force, this reporter arrived at Guangzhou Metro Line 3 at 5 p.m., during rush hour, having departed the Zhujiang New Town Station heading north towards the airport.”)

The result:

['<s>', '新', '規定', '執行', '首', '日', ',', '記者', '在下', '班',

'高峰', '前的', '下午', '5', '時', '來到', '廣州', '地', '鐵', '3',

'號', '線', ',', '從', '繁忙', '的', '珠江', '新城', '站', '啟',

'程', ',', '向', '機場', '北', '方向', '出發', '。', '</s>']

These tokens do not map to any specific dictionary of Chinese words (词典). For example, “啟程” - qǐchéng (depart, set out) would typically be categorized as a single word but is here split into its two constituent characters. Similarly, “在下班” would usually be recognized as two words, but with the split between “在” - zài (in, during) and “下班” - xiàbān (the end of the workday, rush hour), not between “在下” and “班” as the tokenizer has done here.

In all three languages, the places where the tokenizer breaks the text up are not directly related to the logical places where a human reader would break them.

This is not a specific feature of Jina Embeddings models. This approach to tokenization is almost universal in AI model development. Although two different AI models may not have identical tokenizers, in the current state of development, they will practically all use tokenizers with this kind of behavior.

The next section will discuss the specific algorithm used in tokenization and the logic behind it.

Why Do We Tokenize? And Why This Way?

AI language models take as input sequences of numbers that stand in for text sequences, but a bit more happens before running the underlying neural network and creating an embedding. When presented with a list of numbers representing small text sequences, the model looks each number up in an internal dictionary that stores a unique vector for each number. It then combines them, and that becomes the input to the neural network.
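The lookup step can be pictured as a plain table from token IDs to vectors. The sketch below uses made-up three-dimensional vectors for four of the token IDs seen earlier. Real models store a much longer, learned vector for every entry in the vocabulary, so the numbers here are purely hypothetical.

```python
# Hypothetical 3-dimensional vectors for four token IDs. Real models store
# one much longer vector for every entry in a vocabulary of many thousands.
EMBEDDING_TABLE = {
    101: [0.1, 0.0, -0.2],
    2054: [0.5, -0.3, 0.8],
    2003: [-0.4, 0.9, 0.1],
    102: [0.0, 0.2, 0.3],
}


def lookup(token_ids):
    """Map each token ID to its vector, giving one row per token."""
    return [EMBEDDING_TABLE[token_id] for token_id in token_ids]


matrix = lookup([101, 2054, 2003, 102])
print(len(matrix), "tokens x", len(matrix[0]), "dimensions")

# An ID with no entry in the table cannot be processed at all.
try:
    lookup([101, 99999, 102])
except KeyError as missing:
    print("no vector for token ID", missing)
```

The failure in the last step is why the tokenizer must only ever produce IDs that exist in the model's table.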

This means that the tokenizer must be able to convert any input text we give it into tokens that appear in the model’s dictionary of token vectors. If we took our tokens from a conventional dictionary, the first time we encountered a misspelling or a rare proper noun or foreign word, the whole model would stop. It could not process that input.

In natural language processing, this is called the out-of-vocabulary (OOV) problem, and it’s pervasive in all text types and all languages. There are a few strategies for addressing the OOV problem:

- Ignore it. Replace everything not in the dictionary with an “unknown” token.

- Bypass it. Instead of using a dictionary that maps text sequences to vectors, use one that maps individual characters to vectors. English only uses 26 letters most of the time, so this must be smaller and more robust against OOV problems than any dictionary.

- Find frequent subsequences in the text, put them in the dictionary, and use characters (single-letter tokens) for whatever is left.

The first strategy means that a lot of important information is lost. The model can’t even learn about the data it’s seen if it takes the form of something not in the dictionary. A lot of things in ordinary text are just not present in even the largest dictionaries.

The second strategy is possible, and researchers have investigated it. However, it means that the model has to accept a lot more input and has to learn a lot more. This means a much bigger model and much more training data for a result that has never proven to be any better than the third strategy.

AI language models pretty much all implement the third strategy in some form. Most use some variant of the Wordpiece algorithm [Schuster and Nakajima 2012] or a similar technique called Byte-Pair Encoding (BPE) [Gage 1994, Sennrich et al. 2016]. These algorithms are language-agnostic. That means they work the same for all written languages without any knowledge beyond a comprehensive list of possible characters. This makes them a natural fit for multilingual models like Google’s BERT, which take just about any input scraped from the Internet (hundreds of languages, plus texts other than human language, like computer programs), because such models can then be trained without doing complicated linguistics.
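To give a feel for how “find frequent subsequences” works in practice, here is a simplified sketch of BPE vocabulary learning in the style described by Sennrich et al.: start from single characters, then repeatedly merge the most frequent adjacent pair of symbols into a new symbol. The word counts are a toy example invented for illustration, and production tokenizers add many details (byte-level handling, word-boundary markers, tie-breaking rules) that are left out here.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe(word_counts, num_merges):
    """Return the list of merges learned from a {word: frequency} mapping."""
    # Start with every word split into single characters.
    vocab = {tuple(word): count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        # Count how often each adjacent pair of symbols occurs.
        pairs = Counter()
        for symbols, count in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += count
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the first pair seen
        merges.append(best)
        vocab = {merge_pair(s, best): c for s, c in vocab.items()}
    return merges


# Toy corpus: word frequencies invented for this example.
toy_counts = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges = learn_bpe(toy_counts, num_merges=3)
print(merges)
```

Each merge adds one entry to the token dictionary, so the number of merges directly controls the vocabulary size. Anything never merged is still representable as single characters.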

Some research shows significant improvements using more language-specific and language-aware tokenizers. [Rust et al. 2021] But building tokenizers that way takes time, money, and expertise. Implementing a universal strategy like BPE or Wordpiece is much cheaper and easier.

However, as a consequence, there is no way to know how many tokens a specific text represents other than to run it through a tokenizer and then count the number of tokens that come out of it. Because the smallest possible subsequence of a text is one letter, you can be sure the number of tokens won’t be larger than the number of characters (minus spaces) plus two.
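That worst-case bound is simple to compute. The sketch below is a direct transcription of it: count the non-whitespace characters and add the two special tokens. The function name is invented for this example, and the bound assumes, as the text above does, that every character can at worst become its own token.

```python
def max_token_bound(text, special_tokens=2):
    """Worst-case token count: one token per non-space character, plus specials."""
    non_space = sum(1 for ch in text if not ch.isspace())
    return non_space + special_tokens


sentence = "What is today's weather in Berlin?"
bound = max_token_bound(sentence)
actual = 11  # token count reported for this sentence earlier in the article
print(bound, actual, actual <= bound)
```

The gap between the bound and the actual count shows why this guarantee is of little use for budgeting, and why empirical averages are needed.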

To get a good estimate, we need to throw a lot of text at our tokenizer and calculate empirically how many tokens we get on average, compared to how many words or characters we input. In the next section, we’ll do some not-very-systematic empirical measurements for all Jina Embeddings v2 models currently available.

Empirical Estimates of Token Output Sizes

For English and German, I used the Unicode text segmentation algorithm (Unicode Standard Annex #29) to get word counts for texts. This algorithm is widely used to select text snippets when you double-click on something. It is the closest thing available to a universal objective word counter.

I installed the polyglot library in Python, which implements this text segmenter:

pip install -U polyglot

To get the word count of a text, you can use code like this snippet:

from polyglot.text import Text

txt = "What is today's weather in Berlin?"

print(len(Text(txt).words))

The result should be 7.

To get a token count, segments of the text were passed to the tokenizers of various Jina Embeddings models, as described below, and each time, I subtracted two from the number of tokens returned.
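The measurement procedure amounts to a short loop. The sketch below keeps the word counter and the tokenizer as pluggable functions so that it runs without downloading anything. The stand-in dictionaries reuse the token IDs and the word count of 7 reported in this article for a single sentence. In a real run, count_words would wrap polyglot and encode would be the model's tokenizer.

```python
def tokens_per_word(segments, count_words, encode, special_tokens=2):
    """Average tokens per word over `segments`, excluding special tokens."""
    total_words = 0
    total_tokens = 0
    for segment in segments:
        total_words += count_words(segment)
        # Each tokenization request adds two special tokens; leave them out.
        total_tokens += len(encode(segment)) - special_tokens
    return total_tokens / total_words


# Stand-ins for illustration only: values taken from this article.
KNOWN_IDS = {
    "What is today's weather in Berlin?":
        [101, 2054, 2003, 2651, 1005, 1055, 4633, 1999, 4068, 1029, 102],
}
KNOWN_WORD_COUNTS = {"What is today's weather in Berlin?": 7}

ratio = tokens_per_word(
    list(KNOWN_IDS),
    count_words=KNOWN_WORD_COUNTS.__getitem__,
    encode=KNOWN_IDS.__getitem__,
)
print(round(ratio, 3))
```

A single short sentence gives a higher ratio than the corpus averages reported below, which is one reason to measure over a lot of text.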

English

(jina-embeddings-v2-small-en and jina-embeddings-v2-base-en)

To calculate averages, I downloaded two English text corpora from Wortschatz Leipzig, a collection of freely downloadable corpora in a number of languages and configurations hosted by Leipzig University:

- A one-million-sentence corpus of news data in English from 2020 (eng_news_2020_1M)

- A one-million-sentence corpus of English Wikipedia data from 2016 (eng_wikipedia_2016_1M)

Both can be found on their English downloads page.

For diversity, I also downloaded the Hapgood translation of Victor Hugo’s Les Misérables from Project Gutenberg, and a copy of the King James Version of the Bible, translated to English in 1611.

For each of the four texts, I counted the words using the Unicode segmenter implemented in polyglot, then counted the tokens made by jina-embeddings-v2-small-en, subtracting two tokens for each tokenization request. The results are as follows:

| Text | Word count (Unicode Segmenter) | Token count (Jina Embeddings v2 for English) | Ratio of tokens to words (to 3 decimal places) |
|---|---|---|---|
| eng_news_2020_1M | 22,825,712 | 25,270,581 | 1.107 |
| eng_wikipedia_2016_1M | 24,243,607 | 26,813,877 | 1.106 |
| les_miserables_en | 688,911 | 764,121 | 1.109 |
| kjv_bible | 1,007,651 | 1,099,335 | 1.091 |

The use of precise numbers does not mean this is a precise result. That documents of such different genres would all have between 9% and 11% more tokens than words indicates that you can probably expect somewhere around 10% more tokens than words, as measured by the Unicode segmenter. Word processors often do not count punctuation, while the Unicode Segmenter does, so you can’t expect the word counts from office software to necessarily match this.

German

(jina-embeddings-v2-base-de)

For German, I downloaded three corpora from Wortschatz Leipzig’s German page:

- deu_mixed-typical_2011_1M: One million sentences from a balanced mixture of texts in different genres, dating to 2011.

- deu_newscrawl-public_2019_1M: One million sentences of news text from 2019.

- deu_wikipedia_2021_1M: One million sentences extracted from the German Wikipedia in 2021.

And for diversity, I also downloaded all three volumes of Karl Marx’s Kapital from the Deutsches Textarchiv.

I then followed the same procedure as for English:

| Text | Word count (Unicode Segmenter) | Token count (Jina Embeddings v2 for German and English) | Ratio of tokens to words (to 3 decimal places) |
|---|---|---|---|
| deu_mixed-typical_2011_1M | 7,924,024 | 9,772,652 | 1.234 |
| deu_newscrawl-public_2019_1M | 17,949,120 | 21,711,555 | 1.210 |
| deu_wikipedia_2021_1M | 17,999,482 | 22,654,901 | 1.259 |
| marx_kapital | 784,336 | 1,011,377 | 1.289 |

These results have a larger spread than the English-only model but still suggest that German text will yield, on average, 20% to 30% more tokens than words.

English texts yield more tokens with the German-English tokenizer than the English-only one:

| Text | Word count (Unicode Segmenter) | Token count (Jina Embeddings v2 for German and English) | Ratio of tokens to words (to 3 decimal places) |
|---|---|---|---|
| eng_news_2020_1M | 24,243,607 | 27,758,535 | 1.145 |
| eng_wikipedia_2016_1M | 22,825,712 | 25,566,921 | 1.120 |

You should expect to need 12% to 15% more tokens than words to embed English texts with the bilingual German/English model, compared with roughly 10% for the English-only one.

Chinese

(jina-embeddings-v2-base-zh)

Chinese is typically written without spaces and had no traditional notion of “words” before the 20th century. Consequently, the size of a Chinese text is typically measured in characters (字数). So, instead of using the Unicode Segmenter, I measured the length of Chinese texts by removing all the spaces and then just getting the character length.
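The character count described here takes only a line of Python. The sketch below strips all whitespace and measures what is left, then computes a tokens-per-character ratio for the short example sentence tokenized earlier (five tokens, not counting the two special tokens). The function names are invented for this example.

```python
def chinese_length(text):
    """Count characters after removing all whitespace (spaces, newlines, etc.)."""
    return len("".join(text.split()))


def tokens_per_character(text, token_count):
    """Ratio of tokens to non-whitespace characters."""
    return token_count / chinese_length(text)


sentence = "柏林今天的天气怎么样?"
print(chinese_length(sentence))
print(round(tokens_per_character(sentence, 5), 3))
```

Note that punctuation is counted as a character under this method, just as it is by the measurements in this section.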

I downloaded three corpora from the Chinese corpus page at Wortschatz Leipzig:

- zho_wikipedia_2018_1M: One million sentences from the Chinese language Wikipedia, extracted in 2018.

- zho_news_2007-2009_1M: One million sentences from Chinese news sources, collected from 2007 to 2009.

- zho-trad_newscrawl_2011_1M: One million sentences from news sources that use exclusively traditional Chinese characters (繁體字).

In addition, for some diversity, I also used The True Story of Ah Q (阿Q正傳), a novella by Lu Xun (魯迅) written in the early 1920s. I downloaded the traditional character version from Project Gutenberg.

| Text | Character count (字数) | Token count (Jina Embeddings v2 for Chinese and English) | Ratio of tokens to characters (to 3 decimal places) |
|---|---|---|---|
| zho_wikipedia_2018_1M | 45,116,182 | 29,193,028 | 0.647 |
| zho_news_2007-2009_1M | 44,295,314 | 28,108,090 | 0.635 |
| zho-trad_newscrawl_2011_1M | 54,585,819 | 40,290,982 | 0.738 |
| Ah_Q | 41,268 | 25,346 | 0.614 |

This spread in token-to-character ratios is unexpected, and the outlier for the traditional character corpus especially merits further investigation. Nonetheless, we can conclude that for Chinese, you should expect to need fewer tokens than there are characters in your text. Depending on your content, you can expect to need 25% to 40% fewer tokens than characters.

English texts in Jina Embeddings v2 for Chinese and English yielded roughly the same number of tokens as they do in the English-only model:

| Text | Word count (Unicode Segmenter) | Token count (Jina Embeddings v2 for Chinese and English) | Ratio of tokens to words (to 3 decimal places) |
|---|---|---|---|
| eng_news_2020_1M | 24,243,607 | 26,890,176 | 1.109 |
| eng_wikipedia_2016_1M | 22,825,712 | 25,060,352 | 1.097 |

Taking Tokens Seriously

Tokens are an important scaffolding for AI language models, and research is ongoing in this area.

One of the places where AI models have proven revolutionary is the discovery that they are very robust against noisy data. Even if a particular model does not use the optimal tokenization strategy, if the network is large enough, given enough data, and adequately trained, it can learn to do the right thing from imperfect input.

Consequently, much less effort is spent on improving tokenization than on other areas, but this may change.

As a user of embeddings, who buys them via an API like Jina Embeddings, you can’t know precisely how many tokens you’ll need for a specific task and may have to do some testing of your own to get solid numbers. But the estimates provided here — circa 110% of the word count for English, circa 125% of the word count for German, and circa 70% of the character count for Chinese — should be enough for basic budgeting.